A few days ago, a reader of my blog sent me a message:

“Hi Erich, I am reading you since a lot of years. I think you are a pillar of my professional career. Thanks for this. Let me ask you now: what do you think about LLM and coding with LLM in embedded? My employer thinks it time to stop to hire people, because in 1/2 years everything will be substituted by AI. I am not on the same page. Are you using LLM for coding? What do you think about it? Thanks in advance.”

TL;DR: LLMs are changing and improving, which makes good engineering and education even more important. Studies show that AI can be useful, but productivity does not always increase. AI coding means more critical thinking and responsibility, not less. Engineering and education need to adapt and change. This includes assessments and didactics: back to paper and defending the work. Learning how to learn is becoming the critical skill in the age of AI.

In this article I have collected my thoughts and observations.

Outline

Over the last few days, I have found some time to ponder this topic. As you might be aware, I’m working in engineering, research and education. And 2025 has been a very challenging year.

I do use LLMs (Large Language Models) for coding and beyond. I’m experimenting with these machines, as probably everyone else in the industry is. What I want to share here are some examples and observations about my own usage of LLMs and what I see from others.

I think it is amazing to see what results can be produced with that technology. But I think we need to be careful, because much of the current hype is marketing. Only now has research started to show some results: what works, what does not, and where the problems are. Decision makers (as in the message above) need to understand the technology behind these machines. They need to know what these machines can do well, and what they cannot. Otherwise they will make the wrong decisions.

I don’t want to give an introduction to LLMs here. There are plenty of good resources out there. The key point to remember is that while the models are still evolving, the core concept remains. I’m not an ‘expert’ on all the different AI models and implementations. I don’t have to be. I have experience with them: I have used, implemented and trained models too.

In the next parts, I go through the following:

- Which LLMs I’m using, and why

- The emotions and irrational parts of AI

- How embedded engineering might be different

- The shift in education, didactic tools and why there is a ‘go back to paper exams’

- Examples and my experience with LLMs

LLM Usage

I’m using LLMs nearly daily. I never (ever!) use them with confidential information, sensitive data or personal information. I do not use them for any ‘automatic’ tasks or ‘agents’ without me having the final say and responsibility.

I don’t use LLMs much for direct coding, and if I do, I use Claude in combination with CoPilot. Here again: I’m fully responsible for the code, which includes clarity about the IP of the code used.

For code documentation I’m using CoPilot: for things like describing an interface, adding documentation headers, rewording comment text, or providing test cases.

I’m using Claude for reviewing code snippets or for finding ideas and concepts. It usually gives better results than, for example, ChatGPT. It works well for ‘known’ concepts.

I’m using ChatGPT for brainstorming or finding ideas. Things like ‘find me a cool name for that project which does X’.

I’m using Perplexity if I need more fact-based information, for example if I need citations with good web links.

Or I’m using the AI add-on in WordPress to suggest a better title for this article.

I try not to use LLMs to give me answers or solutions. Instead, I like to ask the LLM to formulate a question for which I have to find the solution. This is where LLMs shine for students and for learning: ask it for the questions! Then find some solutions, and check and discuss them with peers.

The LLM can then offer ideas for improving the solution I have found. This, combined with critical thinking, is a true value add. I use the LLM as a virtual sparring partner.

Emotions!

Personally, I have mixed feelings about LLMs and AI. I see them as useful tools, if used correctly. The technology is still evolving and shows amazing things.

What annoys me are all these AI-generated spam emails. Why let AI agents send emails or post in forums? Rob Pike shared his experience a few days ago on bsky.app.

On the other side, some people seem to be emotionally attached to LLMs. I mean that they take failed examples of LLMs very personally, as if they have to ‘defend’ the machine: it is not the fault of the machine, it is only that I have not used the right prompt. Or it has only been a problem in the past, and model xyz.v200 has it all solved and fixed.

Already a Religion?

Sometimes it feels to me that some see the machine as the new ‘super intelligence’ we have to trust. It sometimes feels to me like a ‘religion’. That ‘Deus in Machina’ or ‘AI-Jesus’ in the St. Peter’s church in Lucerne has at least been declared an art (not confession!) installation. Results from the 900 conversations have been published in a report.

I find it bizarre. Some ‘evangelists’ in forums are claiming ‘the end is near’: they say AI will take over all software development.

Future of Software Engineering?

The real harm of the AI hype and the wild claims is that they will deter young engineers. They might shy away from learning computer science, programming or related areas. But computer engineering, software engineering and embedded development are much more than ‘coding’. You have to understand problems, something AI is not capable of. You have to be innovative and creative in solving real and new problems. The future belongs to problem solvers and critical thinkers, not to ‘statistical parrots’.

The hype and claims of AI startup companies already seem to have a negative impact. Decision makers and hiring managers get confused. See the reader message at the beginning of this article. Or consider that software engineering graduates from Stanford face challenges finding a job:

“A common sentiment from hiring managers is that where they previously needed ten engineers, they now only need “two skilled engineers and one of these LLM-based agents,” which can be just as productive, said Nenad Medvidović, a computer science professor at the University of Southern California.” (Los Angeles Times)

From Bachelor to Master

The issue is real and significant. How can we grow new skilled engineers without hiring graduates, if graduates do not get a chance to learn on the job and gain experience?

What I see is an increased interest in the Master of Science in Engineering program. Instead of entering the job market after graduation, some students decide to extend the Bachelor degree with a Master. They do this with an extra two-year Master program. The Master degree goes above and beyond the basic skills. It is about research in new areas, asking critical questions and solving hard problems. Something today’s LLM technology is not capable of.

Embedded Engineering

What I see is that ‘pure software engineering’ is more affected than ‘electrical engineering’ or the area of embedded systems. This can change in the future. LLMs can be quite effective if your engineering job involves building web pages. They are also useful for mobile applications or for writing scripts to manipulate data. They are effective at least at the prototype level. So if you are working in such areas, you might be in trouble to some extent.

At least for now, LLMs are great for ‘general’ purpose things, because that is what they are trained on: the code found on the internet. And the more general, the better. But when it comes down to an actual and specific system, things are more complex:

“It is more difficult because you run into more uncommon tools and interfaces, and eventually you will need something novel. Like, AI can probably correctly guess most of the important registers on an stm32, but give it something more obscure (especially without an ARM core) and it won’t know shit but will still try to guess. You could give it the datasheet, but I suspect it would need you to at least tell it which part is important, and even if you do it will quickly forget what it has learned (that is, even in the same conversation it will start hallucinating again)” . (Reddit, Will AI take low level jobs)

This holds at least for now. It might change if silicon vendors start producing special models trained on their parts and data. I’m not sure if this will happen, and how long it would take.

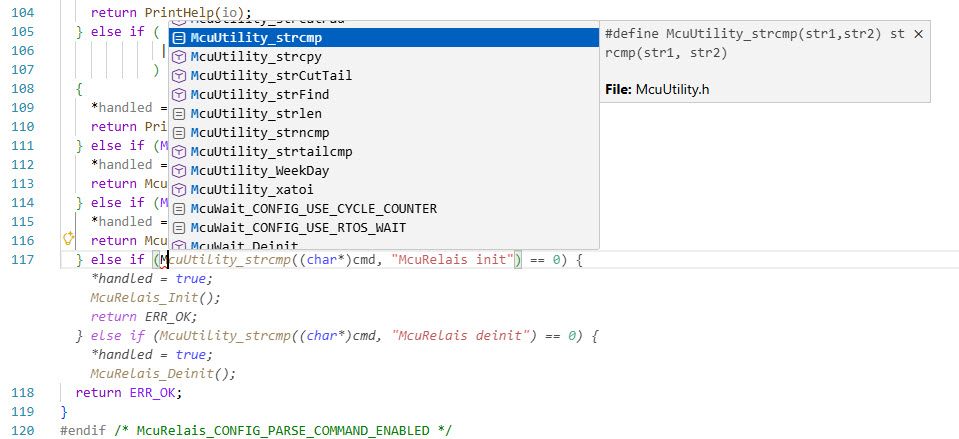

CoPilot Usage

I’m using Microsoft CoPilot for my coding in VS Code. Because of data privacy concerns, I have used it for open source code only.

I used it to document the interface files with comments, or to add more comments to the code. With some review and fixes, that worked very well, and I felt my documentation productivity went up.

It was not perfect, and it was wrong in some cases. Because I knew the interface and the code behind it, it was not a big deal to get it fixed. If it had not been code I wrote myself, that certainly would not have been a good way to work, because it would have produced wrong output.

I used CoPilot for coding too. CoPilot very much adapted to my code style. It proposed code blocks which *mostly* made sense.

They were *kind of* in line with what I wanted to code. But not *exactly*. Most of the time, I ended up accepting the proposal. After that, I ‘fixed’ it to get it right. But I realized that somehow my productivity was not going up: it was going down. Same for my motivation. Instead of writing the code directly with the help of IntelliSense, I corrected the LLM-produced code, which is a frustrating task. In the end, I was constantly reviewing and fixing the LLM code:

“Not the AI solution becoming the reviewer of developer code, but the other way around. If you ask an AI solution or an LLM like, ’hey, I wanna do something,’ you essentially become the reviewer of the AI code.” (Memfault)

Where I find CoPilot very useful is for things like “propose me an alternative implementation of that function”. That usually triggers a thought process similar to the one during a code review. Will it replace code reviews with humans? I don’t think so, but AI augments the review and improvement process.

Experiment: Quadrature Encoder Code

A few weeks ago, I did an experiment with a class of students. Their task was to implement quadrature encoder code for a rotary switch. I explained the concept and basics of a quadrature signal, and used Claude (see above) to dive into the concept.

Then we asked ChatGPT4 to give us the code:
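As a reminder of the basics: a quadrature encoder has two channels, A and B, shifted by 90 degrees, so only one channel changes per step, and the order of the changes gives the direction. A minimal sketch of that rule in C follows. The function names `Quad_Pack` and `Quad_IsSingleStep` are hypothetical and not taken from the class material.

```c
#include <stdint.h>
#include <stdbool.h>

/* Pack the two channel levels into a 2-bit state: bit 1 = A, bit 0 = B. */
uint8_t Quad_Pack(uint8_t a, uint8_t b)
{
  return (uint8_t)(((a & 1u) << 1) | (b & 1u));
}

/* A legal quadrature step changes exactly one of the two channels.
   No change at all, or both channels changing at once, is not a step. */
bool Quad_IsSingleStep(uint8_t prev, uint8_t now)
{
  uint8_t diff = (uint8_t)((prev ^ now) & 0x3u);
  return diff == 0x1u || diff == 0x2u;
}
```

With this packing, 00 to 01 is a single step, while 00 to 11 would mean a missed sample or a glitch.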

    /* proposal to read quadrature encoder according to ChatGPT4 */
    #include <stdint.h>

    // --- Read your encoder pins here ---
    #define READ_A() ((GPIOB->PDIR >> 2) & 1) // Example: PTB2 = A
    #define READ_B() ((GPIOB->PDIR >> 3) & 1) // Example: PTB3 = B

    // States: 0..3 encoded as AB (00, 01, 11, 10)
    static int8_t quad_state = 0; // Current state (0-3)
    static int32_t encoder_count = 0; // Signed position count

    // Transition table (prev_state * 4 + new_state):
    // - 0 = no movement or illegal transition
    // - +1 = forward
    // - -1 = backward
    static const int8_t quad_table[16] = {
      // New = 0 1 2 3 (00, 01, 11, 10)
      0, +1, 0, -1, // Prev = 0 (00)
      -1, 0, +1, 0, // Prev = 1 (01)
      0, -1, 0, +1, // Prev = 2 (11)
      +1, 0, -1, 0 // Prev = 3 (10)
    };

    void Quadrature_Update(void)
    {
      // Encode A and B as 2-bit number
      uint8_t new_state = (READ_A() << 1) | READ_B();
      printf("A:%d, B:%d\n", READ_A(), READ_B());
      // Compute index into table
      uint8_t index = (quad_state << 2) | new_state;
      // Lookup direction
      int8_t movement = quad_table[index];
      encoder_count += movement;
      quad_state = new_state;
    }

    int32_t Encoder_GetCount(void)
    {
      return encoder_count;
    }

    void Encoder_SetCount(int32_t v)
    {
      encoder_count = v;
    }
    /* ------------- end of proposed code ---------------- */

The proposed code above looks OK, right? It is close, but it is wrong. So I asked the students to use that solution and to test it with the debugger. Of course they noticed that it does not work as expected. So I asked them to fix it. Most of them needed more than 45 minutes to understand and fix the code. They asked ChatGPT to fix it, but only ended up with different wrong code that they had to fix. Most agreed that they would have been faster without AI. But it was so tempting to use it because it ‘looked right’, and they did not trust their own skills. And by adding more and more code they don’t understand, they add to the technical debt.
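The following is only one reading of the proposed code, not necessarily the fix worked out in class. One mismatch that can be spotted: the comments number the states in Gray-code order (00, 01, 11, 10), but `(READ_A() << 1) | READ_B()` yields the plain binary value (00, 01, 10, 11), so states 2 and 3 are swapped relative to the table. A minimal host-side sketch, assuming that forward rotation means 00 -> 01 -> 11 -> 10, replays one full forward cycle through the proposed table and through a table reordered for binary indexing. The names `quad_table_fixed`, `quad_forward_cycle` and `Quad_Replay` are hypothetical and introduced only for this illustration.

```c
#include <stdint.h>
#include <stddef.h>

/* Table as proposed by the LLM: written for states numbered in
   Gray order (0=00, 1=01, 2=11, 3=10). */
const int8_t quad_table_proposed[16] = {
   0, +1,  0, -1,
  -1,  0, +1,  0,
   0, -1,  0, +1,
  +1,  0, -1,  0
};

/* Hypothetical corrected table for states numbered as the binary
   value (A << 1) | B, i.e. 0=00, 1=01, 2=10, 3=11.
   Assumption: forward is 00 -> 01 -> 11 -> 10 -> 00. */
const int8_t quad_table_fixed[16] = {
  /* new: 0   1   2   3 */
   0, +1, -1,  0,  /* prev = 0 (00) */
  -1,  0,  0, +1,  /* prev = 1 (01) */
  +1,  0,  0, -1,  /* prev = 2 (10) */
   0, -1, +1,  0   /* prev = 3 (11) */
};

/* One full forward cycle as binary-coded states: 00, 01, 11, 10, 00. */
const uint8_t quad_forward_cycle[5] = { 0, 1, 3, 2, 0 };

/* Replay a sequence of sampled states through a transition table
   and return the resulting position count. */
int32_t Quad_Replay(const int8_t table[16], const uint8_t *states, size_t n)
{
  int32_t count = 0;
  for (size_t i = 1; i < n; i++) {
    count += table[(states[i - 1] << 2) | states[i]];
  }
  return count;
}
```

Under these assumptions, `Quad_Replay(quad_table_proposed, quad_forward_cycle, 5)` returns 0, because the +1 and -1 entries of the swapped states cancel out, while `Quad_Replay(quad_table_fixed, quad_forward_cycle, 5)` returns 4. The proposed code also calls `printf` without including `<stdio.h>` and starts from `quad_state = 0` instead of the actual pin levels, both of which deserve a second look on a real target.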

Productivity

This seems in line with my experience, and some studies come to similar results. One finding, for example, is that AI code produces 1.7x more issues.

If quality (code density, low power, code size, test coverage, …) is not a concern at all (e.g. for a mock-up or proof-of-concept), AI can be a valuable choice or addition. Or: AI can be ‘faster’, but quality suffers.

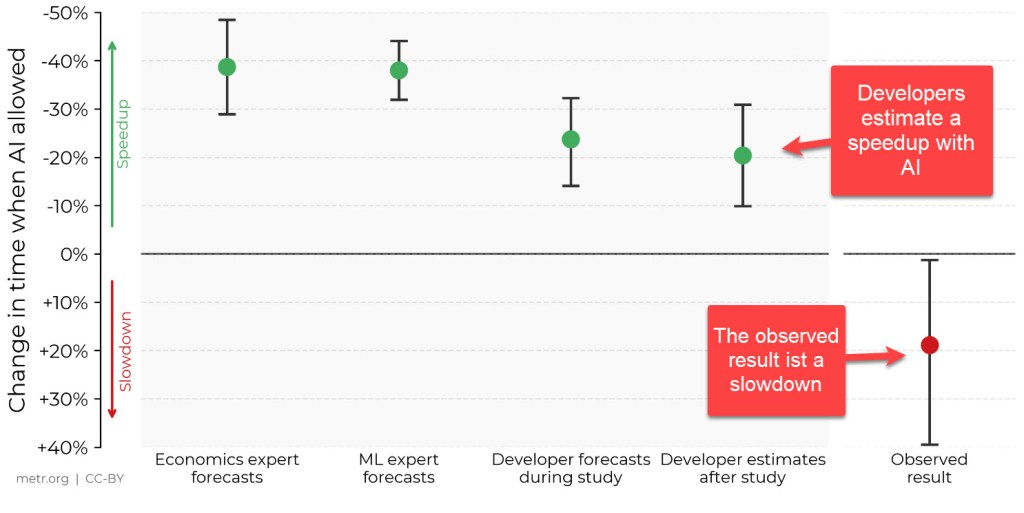

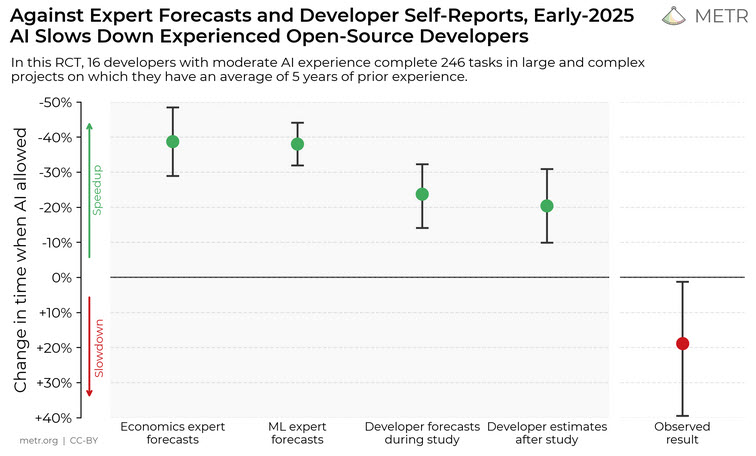

But are we really faster? Some people genuinely seem to believe that they have a performance boost using LLMs. A study shows a different result:

“Believing the AI tools would make them more productive, the software developers predicted the technology would reduce their task completion time by an average of 24%. Instead, AI resulted in their task time ballooning to 19% greater than when they weren’t using the technology.” (Fortune)

The study is available at Cornell University or from metr.org.

Research in “The Impact of Artificial Intelligence on Productivity, Distribution and Growth” shows that ‘adjustment costs’ can contribute to that. I find this overconfidence and self-perception of the developers really interesting. It matches what I’m observing in discussions I had with multiple engineering groups. But this is not bound to AI and LLM usage: humans tend to overestimate their skills. More than half of the population thinks they are ‘better and above average’ :-).

Critical Thinking

You probably know this: in the past, we navigated using landmarks and maps. This was before the era of GPS and navigation systems. Now many of us are lost if there is no internet or GPS signal available. Or we used to memorize many phone numbers, and now we can barely remember our own. Or with the invention of electronic calculators, we lost the ability to do even simple math ourselves. The machine is offloading this from us, for good or bad.

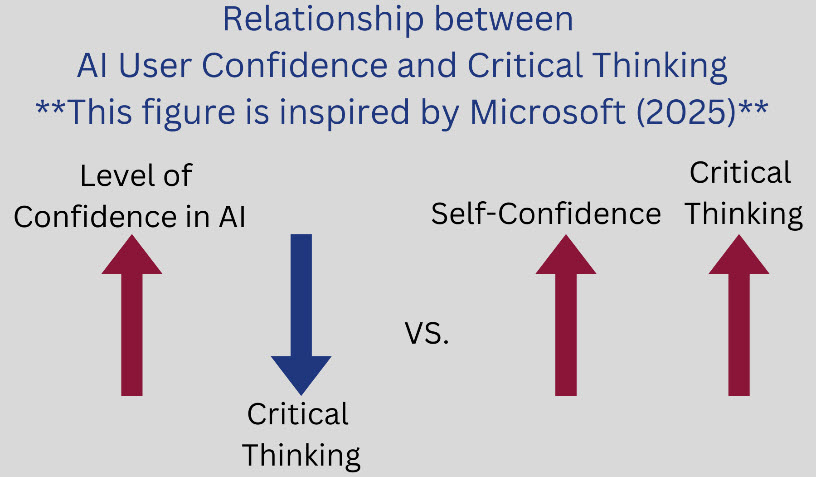

A similar question is a subject of research: are we losing the ability for critical thinking with the use of AI and LLMs?

Microsoft did a study about “The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers”. It shows an interesting relationship between ‘confidence’ and ‘critical thinking’:

What engineers and students need to succeed long-term is critical thinking.

“No clever prompt we type into an AI tool will ever be able to replace human critical thinking – especially when tools like ChatGPT are often inaccurate. In reality, critical thinking becomes even more necessary in the age of AI, both to use it properly, and to do the necessary work behind the scenes to make it a more reliable tool.” (Forbes)

Trust and Responsibility

Students and junior engineers often trust the code produced by LLM systems. They seem to believe in it more than their own code. They get some code from the machine, and they believe it, and try to get it working.

Memfault hosted a good podcast “AI code means more critical thinking, not less” about this:

“We need to relearn to trust ourself. And the more skilled we are, the better we can use those LLM systems.” (Memfault)

“Those systems are really good systems for people with knowledge, people with skill, and they have to be really skilled because they need to trust their own skillset more so than trusting the LLM system.” (Memfault)

“I think we collectively get dumber because we lose critical thinking … we just believe the LLM, we just believe, as you just said, a non-deterministic system that is just putting the next best word in front of you, and we believe it.” (Memfault)

In the end, engineers have to take responsibility for what they deliver. This will be very difficult if it is a huge blob of code produced by ‘vibe coding’. It makes a fundamental difference whether you ‘vibe code’ something for your hobby or for real. Think about safety-critical code or critical infrastructure.

Critical Thinking in the Age of AI

Christine Anne Royce proposes good strategies for critical thinking:

- Promote Active Engagement With Scientific Data: instead of letting AI generate answers, interpret the AI-generated data yourself.

- Use AI to Facilitate Scientific Argumentation: Use AI tools to gather evidence for debates or arguments. Always ‘fact check’ the information.

- Require the Use of Claim, Evidence, and Reasoning (CER): Formally ask questions, form hypotheses and conduct experiments. AI can support this as a sparring partner or by offering dynamic simulations.

- Frame AI as a Resource, Not a Shortcut: Don’t use AI to get a solution. Instead, use it as a discussion partner to find your own solution. The path and the thinking process are as important as the solution.

Unfortunately, this is not always easy to implement.

Learning in the Age of AI

“AI will destroy the critical thinking of the students. Most of them will just delegate their assignments and projects to AI (they already started doing this). Is also likely that many professors will delegate the verification and grading of those assignments to AI. It will be glorious: AI will do the homeworks, AI will grade them.” (LinkedIn)

I can confirm that students are heavily relying on AI for their assignments and homework. This is not a problem in itself, as AI tools and LLMs can be helpful and useful if used correctly. They should be used to help in the learning process, not to save time or take a shortcut. To be relevant in the market, you need to have experience. And this means coding the hard way, making mistakes, learning from mistakes and improving over time. Learning a new programming language makes a lot of sense, even these days. Or should we stop learning to walk, because robots can walk too?

Hybrid Classrooms

AI is here, and will stay here. But it has an impact:

“I’m a teacher at a university of applied sciences, and I’ve noticed a sharp decline in my students’ problem solving and critical thinking skills. Students can regurgitate knowledge without a problem, but applying it in new situations or contexts.. That’s a no go. And that means that they’re progressing much, much slower than my previous cohorts of students. Thankfully, while it took us a while to adapt, but now we’ve found a way to train students to think critically while still allowing the use of AI. However, this has made our educational track a lot more difficult for some students who, previously, might still have eked by just by sheer force of will.” (Reddit)

The era of AI of course has an impact on teaching. The concept of “Flipped Classroom” is not new. Many students don’t like it because it can be badly delivered. And compared to ‘classical’ class concepts, it can mean more work for everyone. Students have to learn and read the material prior to class time, so time in class can be dedicated to problem solving and critical thinking. About half of my course material is now in this format. Not everything worked well at the start, so I have constantly adapted the content and format. It is not the ‘textbook flipped format’ any more. I call it a ‘hybrid’ approach with pre-recorded inputs, followed by in-class assignments and group work. Let them use all available tools, including AI, for preparation. Classroom time is dedicated to group work, discussions and critical thinking.

“I was skeptical about the flipped classroom format at first, but the implementation was really great. Erich Styger has implemented a well-thought-out concept here.” (course evaluation 2025)

Shifting Assessments

In education, an important part is the assessments. In the age of AI, ‘everyone can write a good and convincing report’. And this includes any assessment created on student machines, as the result could be from an AI.

“We’ve avoided this problem by reworking all our tests into critical thinking applications. So they do verbal presentations, pressure cooker sessions where they need to apply their skills in new contexts, timed capture the flags on physical devices without access to the Internet, verbal assessments, they do classroom exercises where they give each other feedback and they brainstorm ideas together… It’s a fun solution.” (Reddit)

There has been a de-emphasis on things like writing reports or delivering written content. Instead, I have shifted to more verbal assessments and classroom tasks. Student understanding is checked with verbal discussions and a ‘defense’. This applies especially to ‘critical thinking’.

Still, normal ‘tests’ exist and are used. But the ‘online/electronic’ tests have been moved back to paper.

From e-assessments to p-assessments

For formative and summative assessments, I have used electronic or online test systems, things like SEB, MOODLE or ILIAS. Students would use their own machines.

In the age of AI, I consider such a setup ‘insecure’ or ‘unfair’. Students can run trained AI models on their machines, or use paid models for an advantage. Exams should not be easy to cheat on, so that they are equal for everyone. I see that universities invest a lot of money and time to respond to the ongoing challenge. They try to solve a technical problem with more IT infrastructure and technology. To me, this is a cat and mouse game, and not sustainable.

I stopped using (electronic) e-assessments. Over the last months, I have started going back to paper-based assessments, or p-assessments.

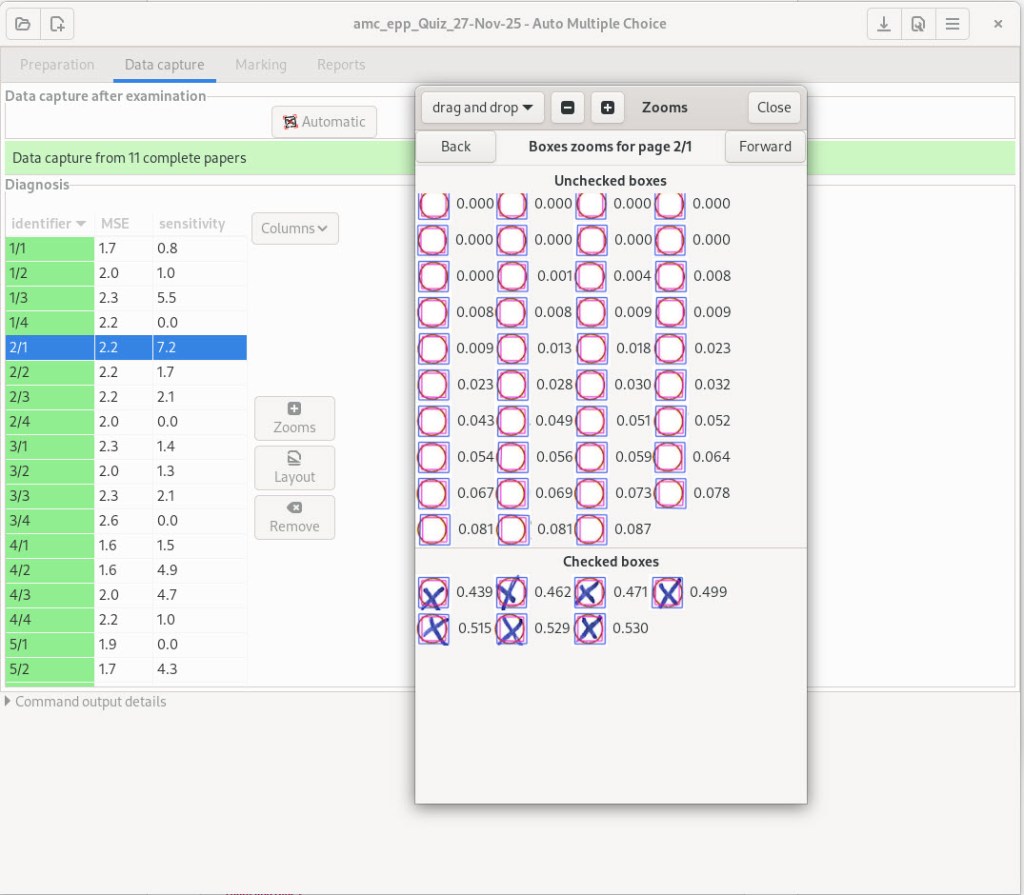

Paper Processing in the Age of AI

I’m using AMC, or auto-multiple-choice, for this. It is a suite of open source software for exams or surveys. AMC is not based on LLMs or AI, but it is a tool for the age of AI. It uses computer vision to analyze answer sheets. With this, a level of automation can be reached that is comparable to online tests.

AMC combines the benefits of e-assessments with paper based tests. This includes automatic grading of certain question types, like single-choice or KPRIM questions. No technology barriers for the students. Students only need a pen. No worries about broken notebooks, complex setup or incompatible software or tools.

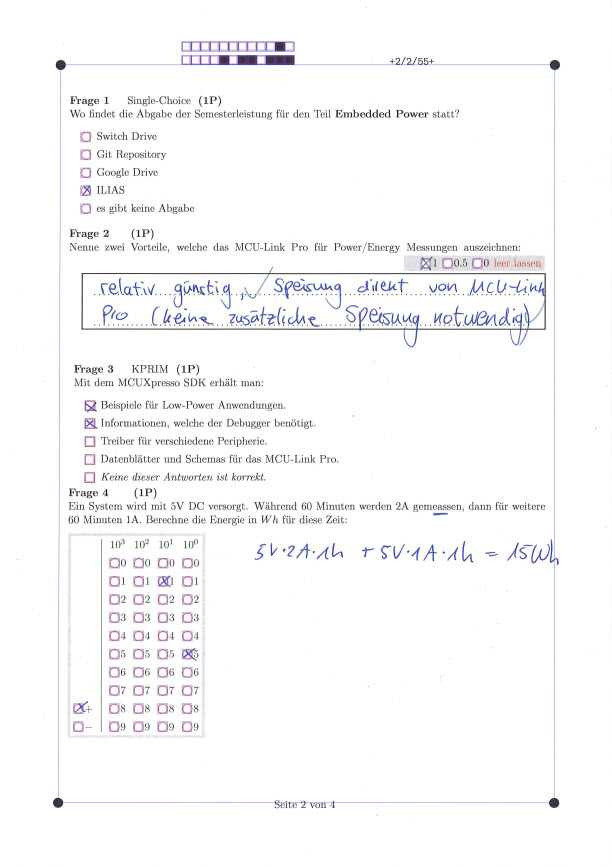

AMC-based tests are written in LaTeX. Students use a pen (it can be an erasable pen too) to write and mark the answers on paper. The sheet below just shows some of the capabilities and how it looks. It includes single-choice, open, KPRIM and numerical question types:

The paper sheets include special markings and areas to tick the answers. Text questions are graded on paper too (example question 2 above). Then all the sheets get scanned, and image processing software finishes the marking.

I’m using it both for formative and summative tests.

“I really like the hybrid approach – we get to know the topic before hand. Then check how well we understood it with the test during class time. Also, the tests prepares and shows the expected look of the exam. I find that doing sample tests during the duration of the semester usually results in better performance on the exam. Another thing, I really like that we get to play and learn with actual hardware.” (Student feedback)

AMC is not directly related to LLMs and AI. It is a good tool for quick tests and assessments in the age of AI.

Summary

AI and LLMs are here to stay, and are constantly improving. What I have described here is the current state, and things might be very different a year from now. If the fundamentals remain the same as today, I expect only gradual improvements. There might also be an end to the hype: back to more realistic expectations, with a burst of the bubble.

But AI and LLMs won’t go away. They are machines, and they are good at augmenting and assisting, not at completely replacing things. Coding with LLMs today is like using a jackhammer: it generates a large chunk of preliminary work. Then it is up to the accountable engineer to get it in shape and refine it.

All this requires critical thinking, not only from junior engineers but from senior ones as well. And with this, the education industry needs to change too: away from coding only, towards a more holistic role:

“The skill that’s gonna be most important to programmers is learning how to learn.” (Memfault)

That was already important before the era of LLMs. Now it is mandatory. I am curious to hear what your opinion is, so please post a comment!

Happy LLMing:-)

Links

- Katharina Zweig: Weiss die KI, dass sie nichts weiss?: https://www.youtube.com/watch?v=YXo8hz2IkDk

- In The Age Of AI, Critical Thinking Is More Needed Than Ever: https://www.forbes.com/sites/roncarucci/2024/02/06/in-the-age-of-ai-critical-thinking-is-more-needed-than-ever/

- Memfault: https://memfault.com/resources/coredump-007-ai-open-source-and-the-future-of-embedded-development/

- Stackoverflow: https://stackoverflow.blog/2025/11/11/ai-code-means-more-critical-thinking-not-less/

- Los Angeles Times: https://www.latimes.com/business/story/2025-12-19/they-graduated-from-stanford-due-to-ai-they-cant-find-job

- OECD publishing: The Impact of Artificial Intelligence on Productivity, Distribution and Growth

- CodeRabbit: https://www.coderabbit.ai/whitepapers/state-of-AI-vs-human-code-generation-report

PS: Prof. Dr. Katharina Zweig has an interesting view about LLMs, about what they can do and what not. See for example Are Machines the Better Decision Makers? (English, from 2024), or from 2025 Katharina Zweig: Weiß die KI, dass sie nichts weiß? (German).

Great article, Erich! Managers and others in supervisory roles need to understand that although AI has improved greatly over the past few years, it is still prone to making spectacularly awful mistakes. And, as your students found, using AI in an attempt to correct these mistakes can be hugely non-productive!

AI is only as good as the data used to train it *and* the resources available to it for drawing inferences. I love AI and LLM tools, but only to help me come up with ways to sharpen my critical thinking. At least most of the AI tools I’ve tried have been willing to accept correction and incorporate it in further analysis (though we always have to be aware of hallucinations.)

Of course, this brings us back to the awareness that AI is far from infallible – and this is likely to remain a critical weakness for quite some time.


It seems to me that this oversimplification (“AI can do everything”) and the false promises are leading to these wrong management decisions. The reality is not simple.

I believe we will need more engineers to clean up the mess created by decision makers and the AI together.

AI and LLMs are just tools which have their pros and cons. And we have to be very careful to know the capabilities and boundaries.

And it is not only about the training data and inference: it is also about how well a model is tuned for a given task or use case.

And that’s what the core of engineering is about: using the right tool to do the job.


I’ve banned it in our [embedded] shop for multiple reasons:

AI code is interesting for small projects that have a very short life span so if and when (and it will happen at some point) it doesn’t work you can just throw it out and recode it. Basically it’s for makers, not professional coders.


Hi Randy,

excellent points!

1. You might have heard of the Anthropic copyright settlement (https://www.npr.org/2025/09/05/nx-s1-5529404/anthropic-settlement-authors-copyright-ai), costing them $1.5 billion because they used copyrighted material/books without permission. I received a letter too, saying that they stole copyrighted work from me as well. I really hope that this is only the beginning and that the ‘free stealing’ of content to train AI models will stop. Copyright and authorship have to be respected.

2. This is my impression too, and as well shown with the example I have in the article: AI code is hard to understand and really hard to maintain. At the end it will be a lot more work if it has to go beyond the ‘proof of concept’ or ‘quick mock-up’ stage.

3. I observe that young engineers or interns are using such code, and they think it is great because ‘somehow it works’, without understanding it. A senior Dev would never allow such code to get into the system.

4. For me it is amazing to see that senior devs usually ‘don’t trust the code of somebody else’, but without hesitation they trust AI code, because they believe it is better than anything else.

5. I think there is no guarantee. Our university had (has?) a very strict legal agreement with Microsoft about keeping things secret. Microsoft wanted to ‘sell’ the AI ‘bundle/option’. But at the same time they made it clear that all these legal terms and boundaries would be void. They can ‘try’ to keep it secret, but with no guarantee and no obligation. I think this is very telling about how this is handled.

6. This is called ‘cognitive offloading’: the more you use such tools, the dumber you get, because you delegate your critical thinking to the machine. This is something I observe with students too.

I agree: such machines are useful for some hobby stuff, or for some ‘fun experiments’. Otherwise the harm can be really big.


In addition to that: the StackOverflow survey 2025 about AI usage is very telling:

https://survey.stackoverflow.co/2025/ai#sentiment-and-usage-ai-sel-prof
