As time flies by, my projects are evolving. My lab projects get used over multiple semesters, and by default the MCUXpresso projects keep using the SDK version they were created with.

This is good, because I want to have control over which SDK is used. But from time to time it makes sense to upgrade a project to a newer version. In this post I’ll show how an existing project can be upgraded to use a new SDK.

First: make a backup of your project. I’m using git (which I recommend to everyone) for this. While migrating SDKs has worked well for me recently, this might not be the case for a particular (older) SDK.
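Because the whole procedure relies on being able to go back, here is a minimal Python sketch of that backup step. It assumes `git` is on the PATH and the project folder is already a git repository; the helper names and the `pre-sdk-<version>` tag scheme are hypothetical conventions, not something MCUXpresso provides. It stages everything, commits only if something changed, and tags the result.

```python
"""Sketch: snapshot a project in git before an SDK upgrade.

Assumes `git` is on the PATH and the project folder is a git repository.
The tag naming scheme is a hypothetical convention.
"""
import subprocess
from pathlib import Path


def git(repo: Path, *args: str) -> str:
    """Run a git command inside `repo` and return its trimmed stdout."""
    result = subprocess.run(
        ["git", *args], cwd=repo, check=True, capture_output=True, text=True
    )
    return result.stdout.strip()


def snapshot_before_upgrade(repo: Path, old_sdk: str, new_sdk: str) -> str:
    """Commit all pending changes, tag the result and return the tag name."""
    git(repo, "add", "--all")
    # `git diff --cached --quiet` exits with 1 when something is staged.
    has_staged = subprocess.run(
        ["git", "diff", "--cached", "--quiet"], cwd=repo
    ).returncode != 0
    if has_staged:
        git(repo, "commit", "-m",
            f"Snapshot before SDK upgrade {old_sdk} -> {new_sdk}")
    tag = f"pre-sdk-{new_sdk}"  # hypothetical naming scheme
    git(repo, "tag", tag)
    return tag
```

For example, `snapshot_before_upgrade(Path("MyProject"), "2.8.2", "2.9.0")` creates the tag `pre-sdk-2.9.0`. If the upgrade goes wrong, `git reset --hard pre-sdk-2.9.0` brings the tracked files back to that state (and discards any uncommitted changes, so use it with care).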

In general it is not necessary to upgrade a project. But if I want to take advantage of the latest bug fixes or features, it makes sense. And in a classroom environment I want to be on the same latest and greatest SDK my students are using. Another reason for upgrading is if (for whatever reason) the zip file for the old SDK is no longer available, but a newer version is installed.

Migrating

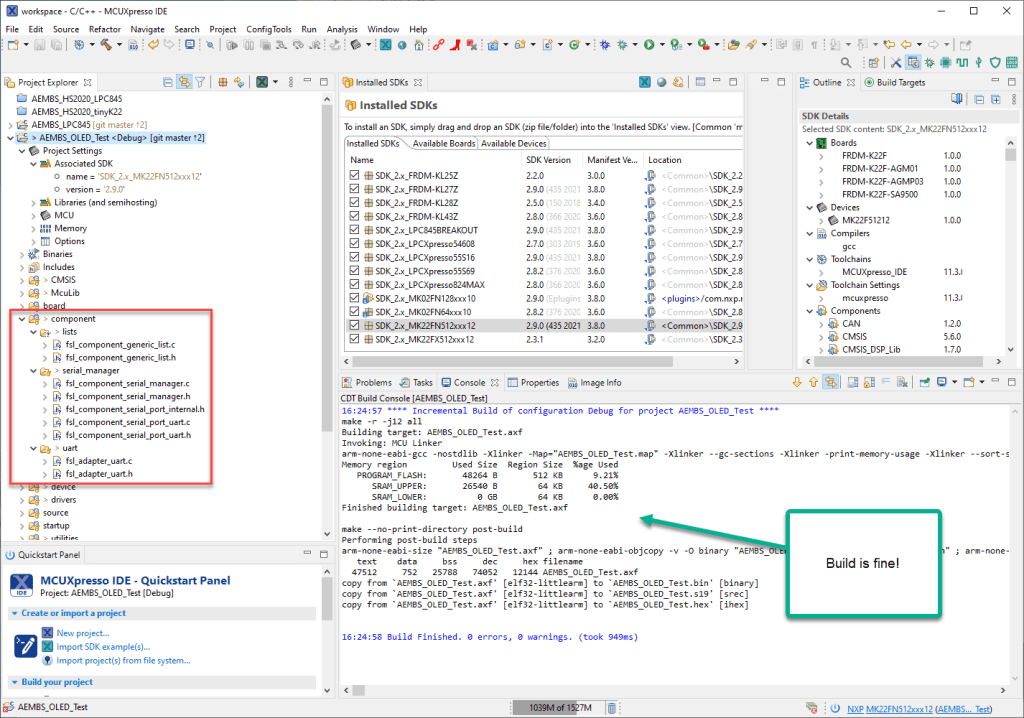

Here is my case: I have an existing project on SDK v2.8.2 and I want to upgrade it to v2.9.0:

Part Support

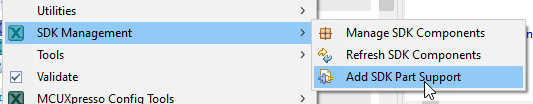

To make projects work without an SDK installed, I usually add ‘Part support’ to them: that way, for example, the debugger has all the information it needs. As a first step I remove the existing part support (if any) to start clean:

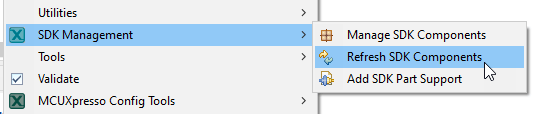

Next I use ‘Refresh SDK Components’:

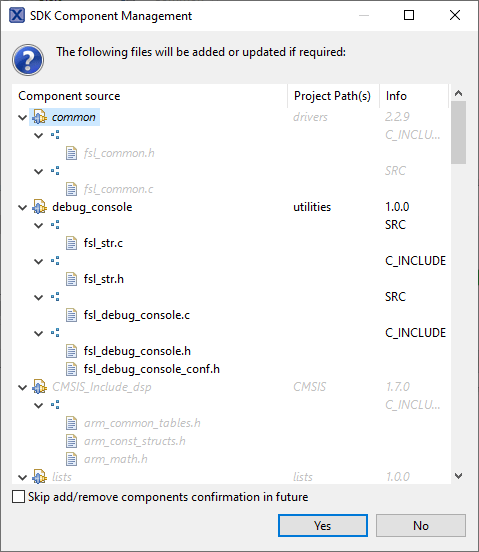

This will show a dialog about the updated/added files. Press Yes.

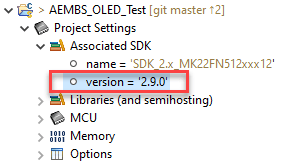

Now it should show that the SDK version has been upgraded:

So far so good.

Changed Files

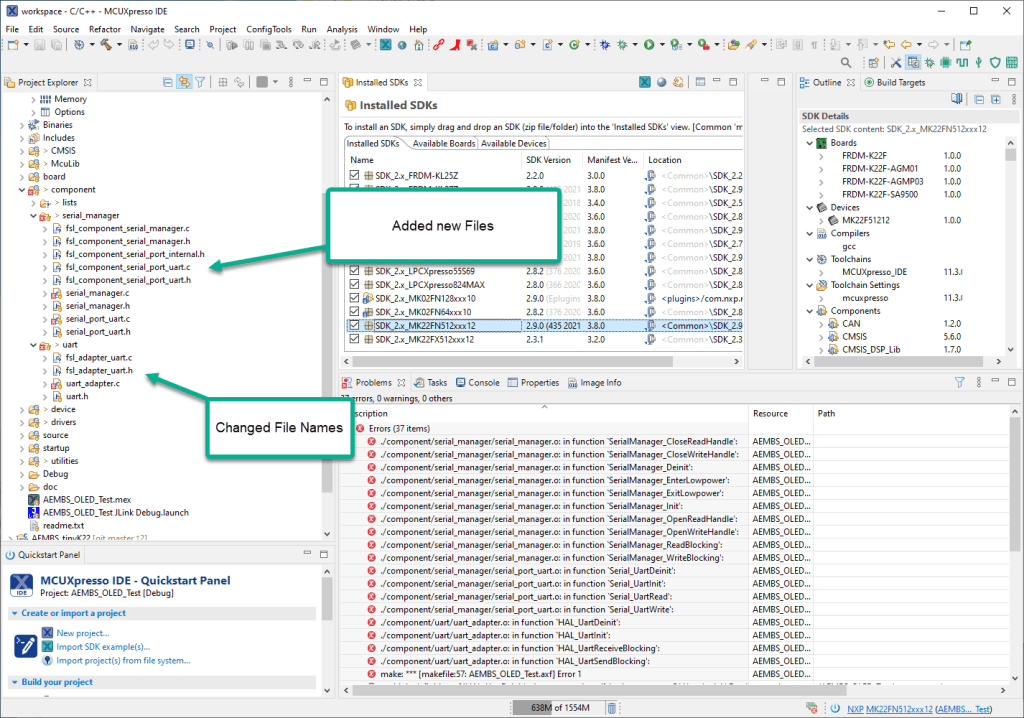

The next step is to try compiling the project. This might fail, because the new SDK may have renamed or replaced files, as below:

In the case above, the file names have changed for a reason. However, the ‘Refresh SDK Components’ action somehow did not remove the old files. So I do it manually: after deleting the old files, I’m able to build the project:
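Finding such leftovers by hand is easy for one or two files. For more, a small script can help. The Python sketch below (hypothetical helper) lists files in the project’s SDK folders whose names no longer appear anywhere in the extracted new SDK. It matches by file name only, because the folder layout inside the SDK package differs from the one inside a project. It assumes the new SDK zip has been unpacked to a folder, and the default folder names are my assumption of a typical MCUXpresso project layout; adjust both to your setup. It only reports candidates and deletes nothing.

```python
"""Sketch: list project files that no longer exist in the new SDK.

Matching is by file name only. The default folder names are assumed,
not taken from any MCUXpresso documentation.
"""
from pathlib import Path

SDK_FOLDERS = ("CMSIS", "component", "device", "drivers", "startup", "utilities")


def stale_sdk_files(project_dir, sdk_dir, folders=SDK_FOLDERS):
    """Return project-relative paths of SDK files missing from `sdk_dir`."""
    project_dir, sdk_dir = Path(project_dir), Path(sdk_dir)
    # Every file name shipped with the new SDK, wherever it lives.
    sdk_names = {p.name for p in sdk_dir.rglob("*") if p.is_file()}
    stale = []
    for folder in folders:
        root = project_dir / folder
        if not root.is_dir():
            continue  # this project does not use that folder
        for path in sorted(root.rglob("*")):
            if path.is_file() and path.name not in sdk_names:
                stale.append(path.relative_to(project_dir).as_posix())
    return stale
```

Review the returned list before deleting anything: a file you added yourself to, say, the `drivers` folder will show up as well.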

Part Support

Next I re-add SDK Part support:

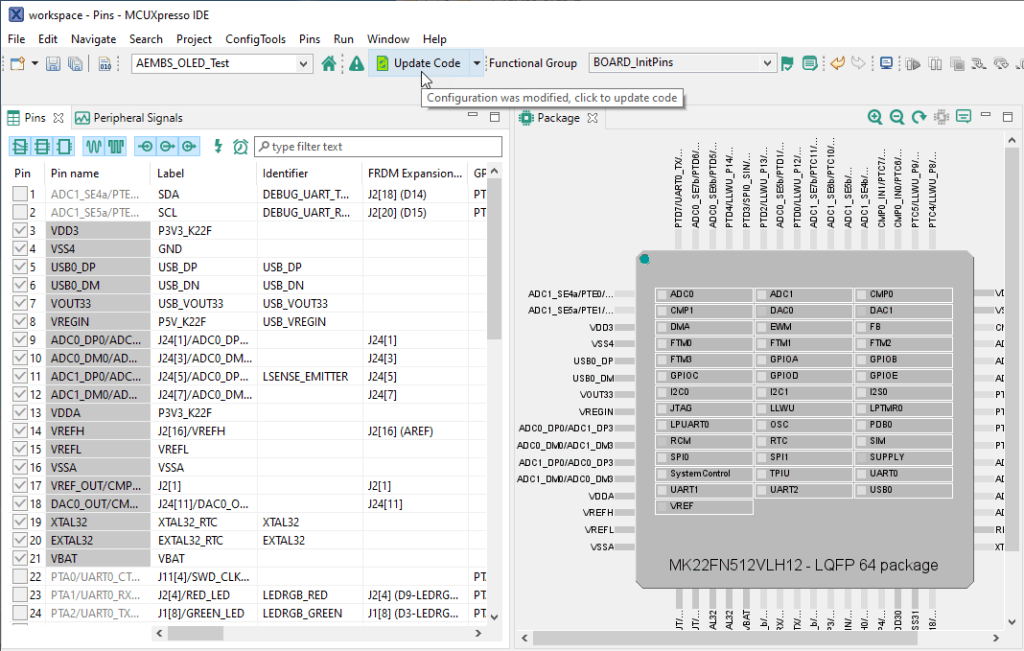

Config Tools

Next I open the Configuration Tools:

This triggers a dialog informing me that new data is loaded, which is fine:

Then make sure to update the code:

Confirm the code update and you should be all set :-).

Well, only if there are no API changes in the SDK. Luckily, the SDK has stabilized a lot over the past year, so chances are high that things will just compile.
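One way to get an early warning about API changes is to compare the driver version macros before and after the upgrade. The Python sketch below assumes the driver headers declare their version like `#define FSL_GPIO_DRIVER_VERSION (MAKE_VERSION(2, 5, 1))` (version numbers illustrative). Treating a changed major version, or a driver that disappeared, as a hint worth reviewing is my own heuristic, not an NXP rule. Point it at the old and the new `drivers` folders.

```python
"""Sketch: compare SDK driver version macros between two folders.

Assumes headers use: #define FSL_<NAME>_DRIVER_VERSION (MAKE_VERSION(a, b, c))
"""
import re
from pathlib import Path

VERSION_RE = re.compile(
    r"#define\s+(FSL_\w+_DRIVER_VERSION)\s+\(?\s*"
    r"MAKE_VERSION\(\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*\)"
)


def driver_versions(folder):
    """Map each FSL_*_DRIVER_VERSION macro found in *.h files to a tuple."""
    versions = {}
    for header in Path(folder).rglob("*.h"):
        text = header.read_text(errors="ignore")
        for name, major, minor, fix in VERSION_RE.findall(text):
            versions[name] = (int(major), int(minor), int(fix))
    return versions


def compare(old, new):
    """Yield (macro, old_version, new_version, needs_review) for changes.

    needs_review is True if a driver was removed or its major version changed.
    """
    for name in sorted(old.keys() | new.keys()):
        before, after = old.get(name), new.get(name)
        if before != after:
            needs_review = before is not None and (
                after is None or after[0] != before[0])
            yield name, before, after, needs_review
```

A minor or bugfix bump does not guarantee an unchanged API either, so this only narrows down where to look first.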

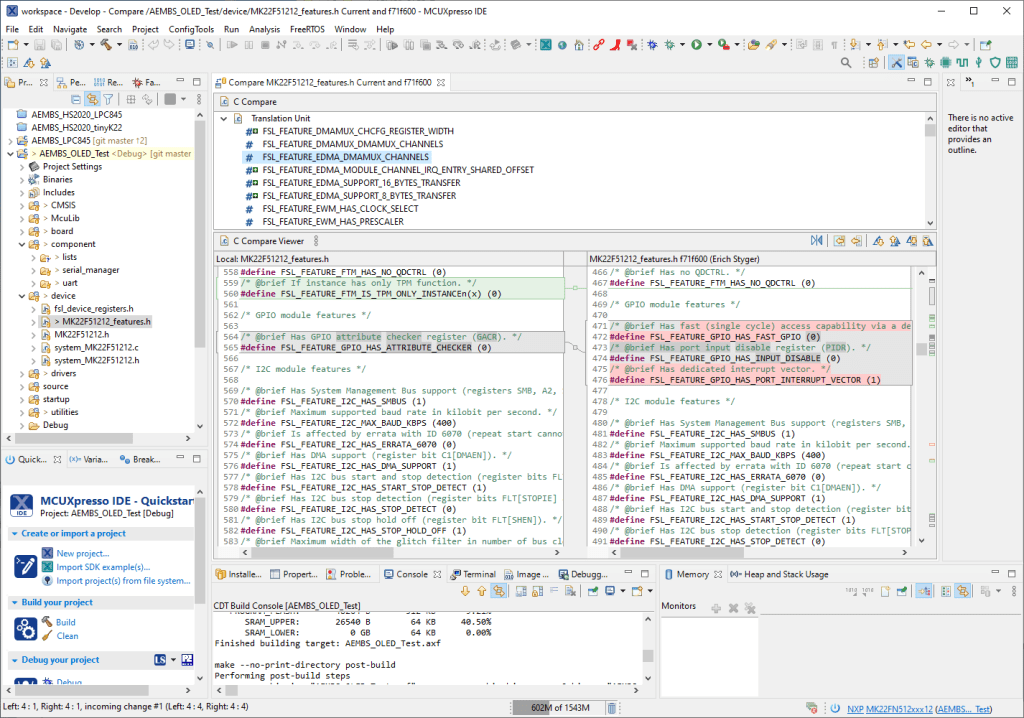

Differences

So what has changed with the new SDK? If you use git with Eclipse, it is very easy to compare:
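The same comparison can be scripted outside Eclipse too. This Python sketch assumes both the pre-upgrade and the upgraded project state are committed, so they are reachable as git revisions (commits or tags), and groups the output of `git diff --name-status` by change type so renamed and deleted SDK files stand out. The function names are hypothetical.

```python
"""Sketch: summarize what an SDK upgrade changed, using git."""
import subprocess
from collections import defaultdict

STATUS_NAMES = {"A": "added", "C": "copied", "D": "deleted",
                "M": "modified", "R": "renamed", "T": "type changed"}


def parse_name_status(output):
    """Group `git diff --name-status` output lines by change type."""
    groups = defaultdict(list)
    for line in output.splitlines():
        if not line.strip():
            continue
        status, *paths = line.split("\t")
        # Renames/copies carry a similarity score ("R087"); keep only the letter.
        kind = STATUS_NAMES.get(status[:1], status)
        groups[kind].append(" -> ".join(paths))
    return dict(groups)


def changes_between(old_rev, new_rev="HEAD", repo="."):
    """Return the grouped changes between two committed revisions."""
    out = subprocess.run(
        ["git", "diff", "--name-status", old_rev, new_rev],
        cwd=repo, check=True, capture_output=True, text=True,
    ).stdout
    return parse_name_status(out)
```

Comparing two commits rather than a commit and the working tree means newly added, still untracked files are not missed.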

Summary

Upgrading to a newer SDK version is possible and can make sense (depending, of course, on your needs). Migrating a project that is still in development is reasonable, and with the steps above it is doable with minimal effort. I’m doing this for my many class and lab projects, and it has worked fine so far.

Happy Upgrading 🙂

Erich,

What is your experience with upgrading the SDK during a project’s development? Based on my experience, I’m reluctant to make the change mid-development (or even after product release), because any change in the SDK library may change how the project operates, and those changes have to be reviewed to ensure that the project’s functionality hasn’t been affected. I’ve had more than one case where a fix for a problem in a previous library ended up causing problems with the application because of the code put in to handle the deficiencies of the previous library.

I should also point out that the way NXP handles part SDKs is a major dissatisfier for me, and it feels like a bait and switch. Very few parts have regularly updated SDKs, and those that do tend to be used on Freedom and Tower boards. A developer tries out a Freedom board, finds it has a nice shiny new SDK that works well with MCUXpresso, and decides to go this route. Then, when they choose the part that best matches their requirements, they find that the selected part’s SDK is woefully out of date, and the features they need, which worked well with the SDK they experimented with, may be unavailable or buggy. As the vast majority of part SDKs are not updated to the same level as the ones NXP is focusing on, these problems remain in those SDKs indefinitely, even though fixes are available in the SDKs that are kept at the latest level.

But, I’m not bitter.

>>What is your experience with upgrading the SDK during a project’s development?

As noted in the post, I generally don’t recommend changing the SDK, but it really depends. At least since SDK v2.7, things have been getting better. The good thing is that I use git as my version control system, so I can see what has changed if I have any doubts. Still, it has happened to me several times that we were about one month into a six-month research project when a new SDK with some good changes came out, so I decided to switch.

I have not had many problems with fixes that then caused new problems. That is probably mostly because we have built our own cross-platform, cross-vendor library (from 8-bit to 32-bit, from ARM Cortex to Espressif and RISC-V) which only uses the lower levels of each vendor SDK (not the higher levels) and is based on a cross-platform FreeRTOS port. That’s why we do not use the vendor FreeRTOS, except the one from Espressif (because of the SMP port).

I see the pain you mention with different parts being on different SDK levels as well: to me this is caused by the vast and continuing changes in the silicon and by the lack of a common, uniform abstraction. Other vendors in NXP’s league suffer from this too: in the end they seem to focus on the silicon and not on the software. I believe this might change over time. To me, software and tools are the key decision factor, not the silicon itself. This is something that is going very well in the Raspberry Pi world: they kept the system working and compatible through multiple iterations (yes, they had a few problems, but it worked out). If they continue that strategy with the Raspberry Pi Pico, I’m all for it :-).

Wow that’s a lot of work!

I basically NEVER change, for stability. I know whatever issues exist and work around them; I don’t need a heap of new issues.

Our company’s HCS12X projects haven’t changed since their first builds back around 2005 on CodeWarrior.

It’s taken a while for me to even risk bumping the MCUXpresso version!

My oldest currently active project is for the S08GB60 and dates back to 2006. I still do occasional maintenance on it; it uses the ‘classic’ CodeWarrior with Processor Expert, and it still works :-).

Our oldest HCS12X product still in production has a datestamp of August 2005, from when I first coded it …

Mind you, we are still shipping products that use the Freescale GP32 processor, developed back in 2001 and coded in assembler (I try hard not to have to update those products now, since the dev tools are DOS-based from the 1980s, but they do still run under Windows 10!)

Yes, the challenge is to keep the tools running. I still have command line tools on the shelf from 1994 for a product that is still in the field. I haven’t touched it for a while, and I hope I don’t have to.

Since the Freescale days I’ve kept up with your quest to use MCUXpresso, while I moved to another vendor. When my vendor updates an SDK, it is not per part but per family, and they have migration software that makes it painless. I do have a project that uses NXP parts, but I use PlatformIO, not MCUXpresso. I’ve always resented the fact that NXP bean counters took over the products when they purchased Freescale: PE is ‘only’ $1,000 now.

We’ve recently dropped Microchip from all our designs, mostly because they changed MPLAB to $1500/year pricing (to get access to optimizations etc.); I’m pretty happy with MCUXpresso pricing!

Yes, that $0 price point is very attractive :-). We moved away from using IAR and Keil because even with their university pricing it was not worth the money and all the extra paperwork. I’m ok with buying software tools, but I’m 100% against these monthly/yearly billing models.

I’m trying to think of a situation where I would consider paying for development tools.

If you look at MCUXpresso (or any manufacturer-provided IDE really), they’re based on a standard IDE platform (Eclipse for MCUXpresso), use the GCC compiler, use GDB for debugging, and use the programmer manufacturer’s libraries. I realize that there’s a fair bit of work to integrate everything as well as to provide extras like configuration wizards, but it’s a pretty small fraction of the total package.

I can’t comment on MPLAB: I haven’t looked at it for about five years. It used to be written and supported by Microchip, but as people have moved away from 8-bit MCUs, having a custom tool just doesn’t seem to be the right approach (and there doesn’t seem to be the same value in the modern world, where there are several IDEs that users and companies can choose from).

It seems to me that vendors are trying to charge for legacy tools because a) they know that you probably have no other choice, or b) they want you to move to something new. As for the vendor tools: yes, they are pretty much packaged from other open source packages, but what they really add are the device-specific parts, which you don’t get anywhere else, or only for the most common parts. For example, I have experienced that OpenOCD only supports a few of the parts I’m using, but not the others. Back to the point that there are probably too many variants, MCUs and SDKs ;-).

Having an SDK per larger family is definitely smart and convenient: most of the parts share the same peripherals anyway. But to me there are still too many changes and variants in the silicon, for no benefit except extra pain and incompatible software. This is probably not a big deal if you never change devices, but if you want to use different parts within a family or from one vendor, the number of SDKs and versions definitely gets in the way.

I upgrade every 2nd or 3rd release. The further behind one gets, the more work it is. Upgrading has exposed issues in my code and/or in the SDK. It’s good to know about them for the product’s sake.

I appreciate NXP’s SDKs. They’re very good, but I do wish they were better documented and/or had more ‘hooks’ in them. I abstract them using C++ wrappers. I avoid modifying actual SDK files so I can easily swap in new SDKs as they become available. More flexibility would be helpful, especially with the USB and CAN drivers.

Finally, I also use Segger Embedded Studio, $0 until you go to production. I wish NXP would support SES in the SDK out of the box like they do Keil/IAR and GCC. SES is close to GCC/Clang, but has some subtle differences when using Segger’s own linker, which is better than the GCC one.

It is always a balance between updating frequently and updating seldom. I agree with the point about every 2nd/3rd update, and that otherwise the gap (and the work) could become too big. I have high hopes that NXP sharing the SDK on GitHub (https://mcuoneclipse.com/2021/01/24/nxp-published-mcuxpresso-sdk-2-9-0-on-github/) could simplify things. But until there is a good package manager and support for it, I feel this won’t fly in the long run. Maybe off-topic: I’m using Rust in some projects and I *love* how it works over there.

I just read your article about NXP SDK 2.9 and GitHub. I especially relate to the zip file issues: the inability to know what has changed (short of comparing files), and the need to make small changes to some SDK files each time an SDK upgrade occurs.

When they open it for contributions on GitHub, I think I will do a PR. My changes are small, only a few lines, but they enable the addition of another IDE, SES, which I think is proving popular. While I like MCUXpresso and use it for some projects, SES gives me native J-Link support, which otherwise only IAR/Keil offer. That is critical for some real-time debugging problems.

I digress, but I prefer MCUXpresso’s IDE: even though Eclipse is not my favourite IDE, I prefer it over IAR and Keil, whose editors leave much to be desired in this day and age. So really it’s a trade-off: a great editor/IDE or a great native debugging experience (as opposed to GDB). For me SES is the happy balance.

But at least I have NXP and their wonderful, poorly documented SDK. I couldn’t live without that! 🙂
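The small SDK modifications mentioned in this comment could be kept as patch files and re-applied after each SDK refresh. A minimal Python sketch, assuming each change is stored as a unified diff in a hypothetical `patches/` folder with paths relative to the project folder, that the project folder is the repository root (or not inside a repository at all), and that `git` is on the PATH:

```python
"""Sketch: re-apply local SDK modifications after an SDK refresh.

Assumes patches live in a hypothetical `patches/` folder, with file paths
relative to the project folder, and that `git` is on the PATH.
"""
import subprocess
from pathlib import Path


def run_git_apply(project_dir, *args):
    """Run `git apply` in the project folder; raise on failure."""
    subprocess.run(["git", "apply", *args], cwd=project_dir,
                   check=True, capture_output=True, text=True)


def reapply_patches(project_dir, patch_dir="patches"):
    """Apply every *.patch in name order and return the applied names."""
    project_dir = Path(project_dir)
    applied = []
    for patch in sorted((project_dir / patch_dir).glob("*.patch")):
        patch_path = str(patch.resolve())  # absolute, independent of cwd
        # Dry run first: raises CalledProcessError without touching files
        # if the patch no longer fits the new SDK sources.
        run_git_apply(project_dir, "--check", patch_path)
        run_git_apply(project_dir, patch_path)
        applied.append(patch.name)
    return applied
```

Each patch is checked right before it is applied, so a patch that no longer fits stops the run with an error; patches applied before it stay applied, which keeps dependent patches (one building on another) working.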

I see SES being especially popular for Nordic projects. I really love the SEGGER stuff, with the exception of SES, which somehow never worked out for me. I believe Rowley built it for them, but I’m not sure any more. To me, the IAR and Keil tools are far behind in usability and extensibility, but many companies still use and pay for them, simply because they get paid support, whereas with others like MCUXpresso you end up with community support. Eclipse has its pros and cons: once you master it, you master most of the other tools in the industry, because they share a common base. The drawback is that it uses Java, and honestly it has not had much of a technology upgrade recently. I’m using VSC for other parts and programming languages, and to me this is the future. But I guess that would be a completely different topic …

Yes, I agree for the most part. I too use VSC; I use whatever suits. Yes, SES is a white-label Rowley CrossWorks product, but much easier to use than Rowley’s generic offering.

One should consider SES for the following reasons:

1. The IDE has come a long way in the past couple of years; it exceeds IAR/Keil in so many ways. Its only drawback (and it’s not one for me) is that it only supports J-Link. You can’t use other debuggers, unlike CrossWorks/IAR/Keil.

2. The editor may not be as good as VSC (what is?), but it is pretty good nevertheless, including the ability to easily swap to VSC and back while in the IDE.

3. The native debugging experience, RTOS threads, trace support and profiling are as good as it gets, and reliable. It doesn’t have some of the fancy tools that MCUXpresso has, though.

4. The compiler is Clang or GCC; I use Clang with C/C++20. The linker is selectable: either the Clang/GCC linker with its horrible ld scripts, or Segger’s own linker, which optimizes better and whose linker scripts are easier to write (and read), similar to IAR.

5. They provide their own standard library which, while not as comprehensive as some GCC libraries, is faster and smaller than GCC’s and comparable with IAR and Keil. Perfectly good enough for hardcore real-time embedded work.

6. Their support forum is good and I have had excellent support from Segger engineers on some pretty hard USB debugging issues.

7. Finally, cost: it is free for hobby and non-commercial/non-production use. It is fully functional; you only pay when you start shipping. The cost is far lower than the big two. Coupled with NXP’s great SDK and middleware, the choice is easy for me. Hopefully the pricing policies won’t change.

Having said that, I think if MCUXpresso supported J-Link natively, then it might be a different story for me.

I will be going into production end of year or early next, so far so good.

regards,

Hi John,

very good points and thoughts.

>>Having said that, I think if MCUXpresso supported J-Link natively, then it might be a different story for me.

Not sure about this one, as the MCUXpresso IDE supports J-Link out of the box and ‘natively’. Or what do you mean here?

Erich

Hi Erich,

As far as I understand, and correct me if I’m wrong, MCUXpresso uses a gdb server that supports j-link (and many others). J-link also comes with its own gdb server, which you can start independently. gdb talks to j-link and the IDE talks to the more limited gdb.

SES is free for non-commercial use, but it ONLY supports J-Link. You can’t, for example, use a PEMicro debugger. So as I understand it, MCUXpresso and many other similar IDEs do not use the actual J-Link SDK, for which a license must be paid to Segger. That SDK provides native access to a J-Link-enabled debugger. GDB abstracts the various native debugger protocols… a good thing I suppose, but in my view Segger is the best, so why compromise? Hardcore real-time debugging and tracing are a staple of Segger and essential to the type of code I write.

There are subtle advantages to using J-Link natively instead of over GDB. These differences probably shrink as GDB improves. It’s one reason why Segger’s Ozone app exists; I used Ozone a lot when I used VisualGDB inside VS. It’s what is great about IAR and Keil: both drive J-Link (and other debug probes) directly. IAR C-SPY and Keil’s integrated debugger are simply the best in my book (pity about their IDEs). I have been an IAR fanboy since the early 90s!

I have read articles on this in the past from Segger, IAR and Keil, but I can’t find them offhand.

I’m pretty sure I’m right, but happy to be wrong. 🙂

Hi John,

>As far as I understand, and correct me if I’m wrong, MCUXpresso uses a gdb server that supports j-link (and many others). J-link also comes with its own gdb server, which you can start independently. gdb talks to j-link and the IDE talks to the more limited gdb.

I think you have that one wrong: the MCUXpresso IDE talks to the ‘native’ GDB Server from SEGGER (C:\Program Files (x86)\SEGGER\JLink\JLinkGDBServerCL.exe). It does the same for the PEMicro one. Eclipse is the GDB client, which talks to the SEGGER GDB Server, which talks to the J-Link probe. Maybe you are mixing this up with the fact that NXP does not provide a true GDB server for the ‘LinkServer’ (MCU-Link, LPC-Link2) debug probes. But the IDE uses the ‘native’ GDB Server/MI protocol for both P&E and SEGGER, so nothing is limited at all. You don’t need the SEGGER SDK to talk to the GDB server: you only need it if you want to interface directly with the SEGGER DLL/library from your own application, which is not required for a debugger connection. SEGGER has documented the GDB server they provide as well, so it is very easy to script it or to use it from the command line.

I have not looked at how SES does this, but my understanding is that it does the same and talks to the SEGGER GDB Server, so I think there is no difference here.
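As a small illustration of scripting the SEGGER GDB server, here is a Python sketch that launches it with a few of its command line options (`-device`, `-if`, `-speed`, `-port`). The executable path is the Windows default mentioned above, the helper names are hypothetical, and the device name in the usage example is a placeholder: use the J-Link device name of your own part.

```python
"""Sketch: start the SEGGER J-Link GDB server from a script.

Path is the Windows default install location; adjust for your system.
"""
import subprocess

JLINK_GDB_SERVER = r"C:\Program Files (x86)\SEGGER\JLink\JLinkGDBServerCL.exe"


def gdb_server_cmd(device, interface="SWD", speed_khz=4000, port=2331,
                   exe=JLINK_GDB_SERVER):
    """Build the command line for the J-Link GDB server."""
    return [exe, "-device", device, "-if", interface,
            "-speed", str(speed_khz), "-port", str(port)]


def start_gdb_server(device, **kwargs):
    """Launch the server in the background and return the process handle."""
    return subprocess.Popen(gdb_server_cmd(device, **kwargs))
```

For example, `server = start_gdb_server("MK64FN1M0xxx12")` starts the server; a GDB client such as `arm-none-eabi-gdb` can then connect with `target remote localhost:2331`, and SEGGER-specific commands like `monitor reset` go through the same connection. Call `server.terminate()` when done.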

Yes, you are correct and I misspoke: I meant DLL, not SDK. However, MCUXpresso uses a GDB server, and that is not ‘native’. To be truly native (and not limited), the IDE debugger must use the J-Link DLL directly, not via GDB, and make use of all the native (hardware debugger) functionality. Even Segger recommends this when using SES, over its own GDB server (which is also an option).

You are also correct in that the Segger GDB server has special non-standard GDB monitor commands that you can access from the command line. These are unlikely to be supported by a standard GDB client such as MCUXpresso (I think). But I don’t do command line while debugging… been there, done that, to be avoided! 🙂

I can’t remember all the advantages of bypassing GDB and going straight to the DLL; performance, trace, stability and real-time monitoring (no need to halt) are some of them. There are differences. They are not many, but they can be critical for some applications, and I reckon it’s why IAR and Keil especially are so expensive, apart from their proprietary compilers/linkers.

Perhaps they are not critical for most people… I tried using my J-Trace with Sysprogs VisualGDB and Visual Studio… it looked like a great combination, with great editing, but it failed miserably in the debugging department. The issues all had to do with GDB being slow and not fully supporting the J-Trace. I gave up, and around that time SES came to my attention. Its debugging experience is as good as Keil’s, but without the cost and the crappy editor.

I also had major issues debugging my USB code and NXP’s USB middleware and driver code with MCUXpresso, issues that I did not have with SES. That was the final decider for me. Bottom line I want the best possible debugging experience AND a good editor. I don’t ask for much!

Perhaps things have changed since?… Perhaps I should re-investigate, but I’m pretty happy with my current workflow. Sounds like a good topic for your next post! 🙂

regards,

As for the Segger IDE, Nordic bundles it with their SDK: it is FREE to use with the SDK, along with the J-Link built into Nordic’s -DK boards. It is modified to work with Zephyr + Kconfig + west + git + ninja + python + pip + tcl, basically the kitchen-sink build system from #*%%. There has not been a working “IntelliSense” interface to Zephyr yet.

I sold all my Microchip ‘stuff’ 4 years ago and I’m glad I did. Their price leap explains why STM8 now has a Cube IDE. I understand the STM8 is a popular China toy now.

The Nordic bundle with SES is very attractive, and we have used it in Nordic projects too. We have an upcoming research project around Zephyr where VSC is on the list of environments to use with it. I really love how VSC has added IntelliSense for Rust.

I nearly chose Zephyr over FreeRTOS, but it did not seem mature at the time. It would be interesting to see it in action. Even more interesting would be the VSC debug environment. I look forward to it.

Currently I still choose FreeRTOS over Zephyr, mainly because FreeRTOS is so much easier to use and very, very robust. I feel it might still take some time until Zephyr gains real traction. If the Zephyr group had not decided to ‘invent yet another RTOS’ and had used FreeRTOS for their system, it would have been a no-brainer for the industry to adopt it. Several concepts in Zephyr are really good, but they do not require a new OS, imho. About VSC: you have probably noticed already that I started with that (https://mcuoneclipse.com/2021/05/01/visual-studio-code-for-c-c-with-arm-cortex-m-part-1/). I’m building that up right now, but it might take some time because I have other things on my plate too.

Yes, I have been following your Rust and VSC articles. I too played with Rust some time ago, just tinkering. I tried setting it up for debugging with VSC but did not get as far as you did. I’m not convinced Rust is going to replace C/C++ anytime soon, though. 🙂

I really look forward to seeing how you get on with VSC/ARM debugging and whether it will challenge my current workflow. I love VSC as an editor. I do all my Python/gRPC work using VSC, with full debugging. It’s great.

I have always had access to Keil/IAR etc… cost has never been an issue. It’s all I used until we switched to NXP and i.MX RT (which I also love, and the reason I came across your blog).

My ideal would be:

VSC editor OR Visual Studio

Language of my choice – C/C++ for now

Compiler and linker of my choice – I would probably choose IAR’s compiler/linker

Fully integrated J-Link debugging including Trace (SWO/ETM) and RTT

FreeRTOS Thread awareness

Live thread and value monitoring including execution profiling.

No command line stuff!

Hi John,

Rust is interesting not because it is widely used in the industry (especially in embedded), but because engineers love it. And the language is really great too. The other interesting thing is that I know mid-size and large companies that are starting to hire engineers for Rust: they have trouble finding good C/C++ programmers who write good and secure code. Plus they realize that the ones familiar with Rust are probably the best and brightest ones too :-).

>>I have always had access to Keil/IAR etc… cost has never been an issue.

That’s a good place to be, but it is not like that everywhere. I’m not against paying for tools, and I do pay for them. But if I can get the same or better for less or no $$, then the choice is easy. I believe many companies might be using proprietary tools just because it is easier to stick with what they have had for years, and they do not realize that things might have changed.

As for your ‘wish list’: I hope I can cover most of it, thanks for the list!

That is a fascinating insight about Rust! My engineering manager, a hardware guy, introduced me to Rust, and he is one brilliant engineer, so your hypothesis holds true! 😁 I am looking for a junior to mid-level embedded engineer now, so I will query their Rust knowledge!! They’d better know it! 🤪 C/C++ people, let alone real-time embedded ones, are increasingly difficult to find.

You are right about corporations sticking with their tools. But I think it is also because most embedded companies and firmware engineers are unaware of how good the Visual Studio/Code development environment is. I do have a strong background in desktop and web development. Which is why I now object to Keil and IAR. I have been spoilt. If those companies were smart they would simply develop plugins for VSC. I would do it if I was younger!

Embedded IoT these days is as much about firmware as it is about cloud and services. In my view, one needs to be across both.

Regards

(maybe this discussion should happen in the Visual Studio Code article …)

I agree with you. My take is that more and more modern software development techniques are coming from the desktop world to the embedded world. It is shocking to see how many embedded development teams still do not use version control systems for their daily work. Software development has changed a lot over the last couple of years (version control, cloud, networking, containers, scripting, package managers, …), but these changes are only slowly taking hold in the embedded world. It is an interesting time.
